So when you change the pressure below, it pushes up on the diaphragm and changes the pressure above, but the two media never actually come in contact with each other. This works great on a higher-pressure oil system. A trap is a device like the one below, with clear acrylic walls. The device under test is connected to the top, and your reference is connected below. For debris to travel from the device under test to the main unit, it would have to go up, then down, then back up and back down again. That path acts as a gravity trap that keeps the debris from reaching the standard.


So finally, that brings us to the question: how do we decide whether to do our work in the field or in the lab? We need to consider a few questions:

So in conclusion, pressure calibrations can be challenging, but these challenges can be overcome with the right processes and the right equipment. So I will turn it over to Nicole now to go over any questions.

Ok, before we go into the questions, I just wanted to make everyone aware of a special limited-time offer that's going on right now with Fluke and Fluke Calibration. It's a tiered gift-with-purchase offer for purchases over $250. There are seven tiers, starting at $250, and I think the top level might be for purchases over $10,000. You can choose a gift valued at up to $1,200. If you'd like details, you can go to Transcat.com/deals.

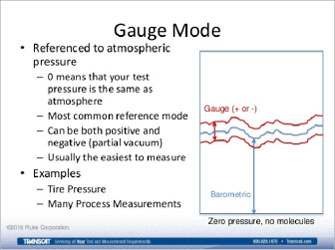

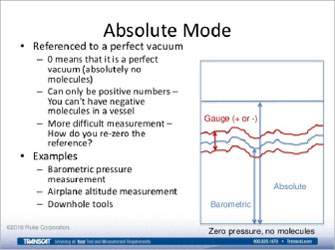

1. What is the difference between gauge and bi-directional gauge?

Ok, you'll also see the term bi-directional gauge, and that simply means the instrument can read both negative (subatmospheric) gauge pressures and positive gauge pressures. Gauge mode oftentimes means just zero and above; that's how some companies define it. Bi-directional, by contrast, means it would read from, say, -5 to +5 PSI, or from -14.7 PSI (roughly full vacuum) up to 15 PSI.
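
A small sketch of the distinction, assuming standard atmosphere (14.696 psi, i.e. 101.325 kPa) as the local atmospheric pressure. The range tuples and function names are illustrative only, not taken from any particular instrument's specification.

```python
STANDARD_ATMOSPHERE_PSI = 14.696  # 101.325 kPa; the real local atmosphere varies


def gauge_to_absolute(p_gauge_psi, p_atm_psi=STANDARD_ATMOSPHERE_PSI):
    """Gauge pressure is relative to atmosphere, so absolute = gauge + atmosphere."""
    return p_gauge_psi + p_atm_psi


def in_range(p_gauge_psi, span):
    """True if a gauge-pressure value lies within an instrument's (low, high) range."""
    low, high = span
    return low <= p_gauge_psi <= high


GAUGE_ONLY = (0.0, 15.0)      # hypothetical gauge-mode range: zero and above
BI_DIRECTIONAL = (-5.0, 5.0)  # the -5 to +5 PSI example from the answer

reading = -3.0  # a subatmospheric gauge pressure
print(in_range(reading, GAUGE_ONLY), in_range(reading, BI_DIRECTIONAL))
print(f"{reading} psig is about {gauge_to_absolute(reading):.3f} psia")
```

It also shows why a bi-directional range bottoms out near -14.7 PSI: that gauge reading corresponds to roughly zero absolute pressure, which is full vacuum.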


2. Can relative humidity affect pressure calibration?

Ok, so the question is: can relative humidity affect pressure calibration? Well, at some level just about anything can affect pressure calibration, so the short answer is yes. How it does depends on the instrumentation you're using. Some measurement devices, some pressure sensors, are susceptible to humidity: when the air is moister, they may give a different reading. Humidity can also affect the electronics inside the instrument; a very dry climate, for example, could possibly affect them. Another example is that a dead weight tester is affected by the density of the air, which in turn depends somewhat on the humidity. Just about every instrument has a humidity specification saying the humidity must be within a certain range, and normally non-condensing, for the instrument to work correctly. So you should pay attention to those specifications.
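
To put a rough number on the dead weight tester point: air buoyancy reduces the effective force of the weights, so the generated pressure scales with the factor (1 - ρ_air/ρ_mass) in P = m g (1 - ρ_air/ρ_mass) / A, and humid air is slightly less dense than dry air at the same temperature and pressure. The sketch below uses a simplified ideal-gas moist-air model with a Magnus-type saturation vapor pressure approximation, not the CIPM-2007 air density equation a lab would normally use. The 8000 kg/m³ mass density (the conventional value for mass standards) and the 20 °C / 80% RH conditions are assumptions.

```python
import math

R_DRY_AIR = 287.058      # J/(kg*K), specific gas constant of dry air
R_WATER_VAPOR = 461.495  # J/(kg*K), specific gas constant of water vapor
MASS_DENSITY = 8000.0    # kg/m^3, assumed density of the dead weight tester masses


def saturation_vapor_pressure(temp_c):
    """Magnus-type approximation in Pa (illustrative, not CIPM-2007)."""
    return 610.78 * math.exp(17.27 * temp_c / (temp_c + 237.3))


def moist_air_density(temp_c, pressure_pa, rh_percent):
    """Ideal-gas mixture of dry air and water vapor, in kg/m^3."""
    t_k = temp_c + 273.15
    p_vapor = rh_percent / 100.0 * saturation_vapor_pressure(temp_c)
    p_dry = pressure_pa - p_vapor
    return p_dry / (R_DRY_AIR * t_k) + p_vapor / (R_WATER_VAPOR * t_k)


def buoyancy_factor(air_density, mass_density=MASS_DENSITY):
    """Fraction of the weights' gravitational force left after air buoyancy."""
    return 1.0 - air_density / mass_density


dry = buoyancy_factor(moist_air_density(20.0, 101325.0, 0.0))
humid = buoyancy_factor(moist_air_density(20.0, 101325.0, 80.0))
shift_ppm = (humid - dry) / dry * 1e6  # relative change in generated pressure
print(f"Generated pressure shift from 0% to 80% RH: {shift_ppm:.2f} ppm")
```

The effect is small, on the order of parts per million, but for a high-accuracy dead weight tester it is the kind of term that belongs in the uncertainty budget.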


3. When a device under test doesn't define the reference temperature for inches of water, what is the industry-standard temperature?

So first off, there's a measurement unit called inches of water, and it's based on pretty much the same phenomenon as head height, in that pressure can be defined as the density of the fluid, times the height, times gravity. The pressure unit inches of water is the pressure associated with a column of water one inch high: the density of water times one inch times standard gravity. Well, the density of water is not constant; it depends on the temperature of the water. So while you have this unit called inches of water, in reality you have three or four different versions of it, each defined at a different reference temperature. The most common would be 4 degrees Celsius, because that's where water's density is best known and where it's at its highest. Another is 20 degrees Celsius, a very common reference temperature, especially for dimensional measurements. So the question is: if the device just says inches of water and doesn't state the reference temperature, which one do you choose, and is there an industry standard? To the best of my knowledge, there is not an industry standard across the many different vertical industries. In the natural gas industry, I think you'll see more of a 23 degrees C, but I'm not a hundred percent positive on that, and other industries will pick others. I would say always contact the manufacturer of the device and find out. If they don't know, it may mean that the accuracy of the device is, for lack of a better word, so poor that the differences in density won't matter. But some people are also just not aware of the situation. I would suggest that your laboratory set up a policy of always using the inches of water defined by the manufacturer.

So if the device says which one it is, make sure you match that on your reference. If it's not defined, then pick one as your definition; personally, I'm a big fan of 4 degrees C. And on calibration reports where you use inches of water, state the conversion factor you used. While the history and definition of each of these units is based on a column of water, it's not very often that they're actually measured with a column of water anymore. Instruments use some sort of electronic sensor and simply convert from, say, pascals to inches of water. So if you include the conversion factor, everybody knows how to get back to a real measurement.
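
A sketch of where the different conversion factors come from, applying P = ρ g h to a one-inch column with standard gravity (9.80665 m/s²). Water density here comes from the Tanaka et al. (2001) formula for air-free pure water, which is one reasonable choice rather than the definition any particular manufacturer uses; the function names are illustrative.

```python
# Tanaka et al. (2001) water density coefficients (air-free pure water, kg/m^3)
A1, A2, A3, A4, A5 = -3.983035, 301.797, 522528.9, 69.34881, 999.974950

G_STANDARD = 9.80665  # m/s^2, standard gravity
INCH = 0.0254         # m


def water_density(temp_c):
    """Density of water in kg/m^3 at temp_c (degrees C)."""
    return A5 * (1.0 - (temp_c + A1) ** 2 * (temp_c + A2) / (A3 * (temp_c + A4)))


def pa_per_inh2o(ref_temp_c):
    """P = rho * g * h for a one-inch water column at the reference temperature."""
    return water_density(ref_temp_c) * G_STANDARD * INCH


def pa_to_inh2o(pressure_pa, ref_temp_c):
    """Convert pascals to inches of water at the stated reference temperature."""
    return pressure_pa / pa_per_inh2o(ref_temp_c)


for t in (4.0, 20.0):
    print(f"inH2O at {t:g} C = {pa_per_inh2o(t):.3f} Pa")
```

The roughly 0.18% spread between the 4 °C and 20 °C factors matters for an accurate instrument and disappears into the noise for a coarse one. Printing the factor actually used (for example, 249.08 Pa per inH2O at 4 °C) on the report makes the conversion reproducible.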


4. If gauges are going to be used at ambient temperatures, should they be calibrated at that temperature or under temperature control?

So the more general question is, do you need to calibrate a device at the same temperature where it's going to be used?

Generally speaking, you can calibrate the device at ambient temperature and be ok; it doesn't necessarily need to be at a controlled temperature. When we manufacture a new device, we characterize it at multiple temperatures. We put it in an oven or environmental chamber, run it at low and high temperatures, look at the pressure output at those different temperatures, install a set of coefficients into the device, and correct for that. The calibration is then put on top of that as the final characterization, or final calibration, of the device. For most devices, the behavior with temperature doesn't change with time. The device's overall behavior will change (it will drift), but because its temperature behavior stays the same, you can calibrate it at one temperature and know it will work at all the temperatures within its range. There are situations, depending on the design of the device, where the calibration instructions will specifically say it needs to be calibrated at a specific temperature, plus or minus X degrees. They do that because the final calibration run assumes, or requires, that it's performed at that particular temperature, so that temperature effects don't end up in the final calibration run but stay part of the compensation process. So generally speaking, pressure calibrations are often done at just ambient temperature, but always check the user manual of the device you're calibrating and make sure that's ok. It's rare that the calibration process requires you to put the device in an environmental chamber or something similar to hold it at a constant temperature.
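
The factory compensation described above, with a single-temperature calibration layered on top, can be sketched under a deliberately simple, hypothetical model. The sensor has a linear zero shift and a linear span shift with temperature (real devices use manufacturer-specific, often higher-order characterizations), and drift over time changes only its gain and offset, not its temperature behavior. The coefficient values, the 23 °C reference temperature, and all names below are hypothetical.

```python
from dataclasses import dataclass

REF_TEMP_C = 23.0  # hypothetical characterization reference temperature


@dataclass
class TempCoefficients:
    zero_per_c: float  # hypothetical zero shift, psi per degree C
    span_per_c: float  # hypothetical fractional span shift per degree C


def raw_output(true_psi, temp_c, tc, gain_drift=1.0, offset_drift=0.0):
    """Simulated sensor: long-term drift, then the temperature effect on top."""
    dt = temp_c - REF_TEMP_C
    drifted = true_psi * gain_drift + offset_drift
    return drifted * (1.0 + tc.span_per_c * dt) + tc.zero_per_c * dt


def compensate(raw, temp_c, tc):
    """Factory compensation: invert the characterized temperature effect."""
    dt = temp_c - REF_TEMP_C
    return (raw - tc.zero_per_c * dt) / (1.0 + tc.span_per_c * dt)


def two_point_calibration(readings, references):
    """Linear zero/span correction (slope, offset) from two compensated readings."""
    (r0, r1), (p0, p1) = readings, references
    slope = (p1 - p0) / (r1 - r0)
    return slope, p0 - slope * r0


tc = TempCoefficients(zero_per_c=0.002, span_per_c=5e-5)
drift = {"gain_drift": 1.001, "offset_drift": 0.05}  # drift since the factory

# Calibrate once, at ambient (23 C), against references at 0 and 100 psi
refs = (0.0, 100.0)
comp = tuple(compensate(raw_output(p, 23.0, tc, **drift), 23.0, tc) for p in refs)
slope, offset = two_point_calibration(comp, refs)

# Use the device at 50 C: compensation handles temperature, calibration handles drift
reading = compensate(raw_output(60.0, 50.0, tc, **drift), 50.0, tc)
corrected = slope * reading + offset
print(f"uncorrected {reading:.3f} psi, corrected {corrected:.3f} psi")
```

In this model a calibration at one temperature is sufficient only because the temperature terms were removed first. If the compensation were imperfect, the residual temperature error would be folded into the final calibration, which is why some manuals pin the calibration temperature.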


5. When calibrating to 87,250 PSI, is a dead weight tester applicable?

There are dead weight testers that will go up to that high a pressure. I can't speak to their usability, as we don't actually make them; the highest pressure we manufacture up to is approximately 75,000 PSI. But you will see a particular design of dead weight tester that can go to that high a pressure. They're not very common, though.


6. You mentioned gravity as a consideration when calibrating, with an example distance of only a hundred miles. How different is gravity between two places?

Yeah, so how different is gravity between two places? It really depends, because gravity is a function of altitude, distance from the equator, and geological conditions. So it's possible to find two locations very far apart that have similar gravity. But at the same time, if you're going from south to north, going up a mountain, or crossing a geological formation difference, you can see a very large difference in the acceleration of gravity. There are a couple of websites available: one specifically for the United States, by the National Geodetic Survey, and one from PTB in Germany for the entire world. You put in the latitude, longitude, and elevation of a location, and it will provide an estimate of the local gravity there. So in your particular case, you can put in the two locations you're interested in, be it your shop versus a hundred miles down the road, and see how different they are. To find those tools, you can obviously search the internet, but if you go to FlukeCal.com, we have an application note that talks about this and provides links to those two tools.
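
For a rough first look before using the NGS or PTB tools, the sketch below estimates gravity from latitude and elevation alone, using the GRS80/WGS84 normal gravity (Somigliana) formula plus the standard free-air gradient of about 3.086e-6 m/s² per metre. It deliberately ignores the geological effects mentioned above, so it is not a substitute for those tools. The two example locations are hypothetical. Because a dead weight tester's generated pressure is proportional to local gravity, the relative difference in parts per million carries straight through to the pressure.

```python
import math


def normal_gravity(lat_deg):
    """GRS80/WGS84 Somigliana normal gravity on the ellipsoid, in m/s^2."""
    s2 = math.sin(math.radians(lat_deg)) ** 2
    return 9.7803253359 * (1 + 0.00193185265241 * s2) / math.sqrt(1 - 0.00669437999013 * s2)


def local_gravity_estimate(lat_deg, elevation_m):
    """Normal gravity plus a free-air correction of about -3.086e-6 m/s^2 per metre.

    Ignores terrain and geological anomalies, which the NGS and PTB tools include.
    """
    return normal_gravity(lat_deg) - 3.086e-6 * elevation_m


shop = local_gravity_estimate(40.0, 0.0)
# Hypothetical site roughly 100 miles due north (~1.45 degrees) and 1500 m higher
remote = local_gravity_estimate(41.45, 1500.0)
diff_ppm = (remote - shop) / shop * 1e6
print(f"Gravity (and dead weight pressure) difference: {diff_ppm:.0f} ppm")
```

One degree of latitude is about 69 miles, which is where the 1.45 degree figure comes from. Note that the northward move and the climb pull in opposite directions, which is why two distant sites can end up with similar gravity.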


7. What method would you recommend for sourcing low differential pressure? For example, .25 inches of water column.

So, low differential pressure: .25 inches of water column. To give everybody a reference, that's a quarter inch of water. That's definitely a draft range pressure measurement, one where air movement in the room, even somebody sneezing, can show up in your pressure measurement. The question is, how do you source that kind of pressure? There are different tools out there to do that. You can use a small variable volume. There are also pressure controllers capable of doing it; one example is a portable device from a company called Setra that can provide a stable pressure. We also manufacture laboratory devices that can provide very stable pressures in that range. There's a whole set of challenges associated with holding a stable, low, draft range pressure like that, because, like I said, if somebody sneezes, your pressure will move around. So make sure your reference is isolated from atmosphere, so that when somebody opens the door, it doesn't change your pressure. And look at ways to increase the volume on both the reference side and the test side, while also keeping those volumes more or less the same, so that as the temperature changes, it affects both sides equally. As for tools, you can use a manual device, as I said, a variable volume that just changes the volume in the system slightly, or an automated pressure controller, which can make the job easier.
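
To see why isolation and matched volumes matter at this level, the sketch below converts the 0.25 inH2O test point to pascals (using the 4 °C factor of 249.082 Pa per inch) and compares it with the pressure change a sealed volume sees from a small temperature change. It assumes an ideal gas in a rigid volume near atmospheric pressure, which is a simplification; the 0.1 °C change and 20 °C room temperature are illustrative values.

```python
INH2O_4C_PA = 249.082  # Pa per inch of water at 4 C


def sealed_volume_pressure_shift(p_abs_pa, temp_c, delta_t_c):
    """Ideal gas in a rigid volume: dP = P * dT / T, with T in kelvin."""
    return p_abs_pa * delta_t_c / (temp_c + 273.15)


def differential_error(p_abs_pa, temp_c, dt_reference_c, dt_test_c):
    """Spurious differential pressure when the two sides warm by different amounts."""
    return (sealed_volume_pressure_shift(p_abs_pa, temp_c, dt_test_c)
            - sealed_volume_pressure_shift(p_abs_pa, temp_c, dt_reference_c))


target = 0.25 * INH2O_4C_PA  # the 0.25 inH2O test point, in Pa
one_side = sealed_volume_pressure_shift(101325.0, 20.0, 0.1)
print(f"Target: {target:.1f} Pa; 0.1 C warming of one sealed side: {one_side:.1f} Pa")
print(f"Matched sides, both +0.1 C: {differential_error(101325.0, 20.0, 0.1, 0.1):.1f} Pa")
```

With these numbers, a 0.1 °C change on one side alone shifts its pressure by more than half the test point, while equal changes on matched sides cancel in the differential.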


8. What should the accuracy of the reference instrument be in comparison to the test instrument?

Ok, so that is a very wide-open question as well: what should the accuracy of the reference be compared to the test instrument? In a normal calibration, you've got a device that you're testing, and you're going to have a reference that's more accurate than it. The question is how much more accurate it needs to be. There's not a proper answer I can give in thirty seconds or less, but there are some rules of thumb that have been used historically. At one time the rule of thumb was something like 10:1, but it's much more common now to see 4:1. So if your device under test is ±0.1, your reference would need to be something like ±0.025; that's 4:1. The long answer, the more correct answer, is that you need to analyze the uncertainty associated with the two devices and consider that in your overall determination. 4:1 happens to be a ratio where, statistically, the accuracy of your standard has minimal impact on the results for the device under test. There are situations where lower than 4:1 (3.5:1, 3:1, 2.5:1) is actually acceptable and very common. So a lower ratio shouldn't immediately be discounted as unacceptable, but should be evaluated for the actual application. If you have a mission-critical measurement, one where an out-of-tolerance device is going to result in a large recall or a potential safety hazard, then you may want to err on the side of caution and have a higher test ratio.
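
The rule-of-thumb arithmetic can be sketched as follows. To show why 4:1 leaves the standard with little influence, the sketch assumes the two contributions are independent, expressed at the same confidence level, and combined by root-sum-square. That is a simplification for illustration, not a full uncertainty analysis or a formal test uncertainty ratio calculation; the function names are illustrative.

```python
import math


def accuracy_ratio(dut_tolerance, reference_accuracy):
    """Test accuracy ratio, e.g. +/-0.1 against +/-0.025 gives 4.0."""
    return dut_tolerance / reference_accuracy


def rss_inflation(ratio):
    """Factor by which the reference widens the combined spread, assuming
    independent contributions combined by root-sum-square."""
    return math.sqrt(1.0 + (1.0 / ratio) ** 2)


print(f"ratio for +/-0.1 vs +/-0.025: {accuracy_ratio(0.1, 0.025):.1f}:1")
for r in (10, 4, 3, 2.5):
    print(f"{r}:1 -> combined spread x{rss_inflation(r):.3f}")
```

Under these assumptions the standard adds about 3% to the combined spread at 4:1 and under 8% at 2.5:1, which is why lower ratios can still be acceptable once they are evaluated for the application.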
