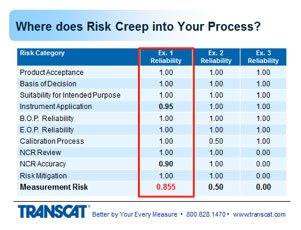

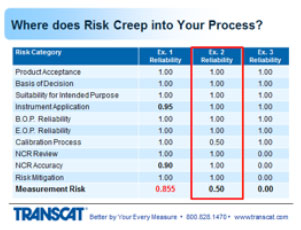

Where Can Risk Impact Measurement Assurance?

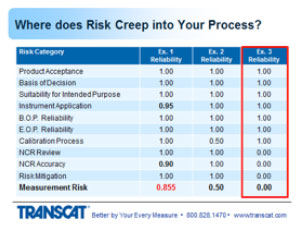

So let's talk about a couple of these different areas of risk and how to keep the measurement assurance high.

1. Suitability for intended purposes is group number three, or item number three. It means that the instrument that was selected measures the right parameters, and by parameters I mean voltage, pressure, temperature, whatever multiple or singular parameters are being measured on the product. It provides valid measurement results, meaning the instrument is accurate and stable enough to provide useful information. It can withstand the environmental conditions to which it's exposed during its use and still provide valid information. And it's user friendly at a level commensurate with the experience of the operator, so they're not introducing errors inadvertently.

2. Moving to instrument application, this means the instrument is being used as designed and as supported by the OEM. Incorrect usage can introduce errors into the measurement process, which will change the outcome for the product and change your decision on whether it's in or out of tolerance. So I'll run through a few examples of this.

a. An SPRT with an indicator. Entering the wrong coefficients in the display will cause temperature readings to be off, and sometimes that can be very significant. There are ways to prevent that now, like using INFO-CON connectors that automatically transfer the coefficients into the readout. But if you don't have those, that's something to be careful of. If you get the wrong coefficients assigned, or if you have the old coefficients assigned to the newly calibrated probe, you're going to get incorrect temperature values.

b. Calipers. If you drop calipers and cause a nick or a burr on the jaws, that's going to interfere with the measurement and cause a shift or an offset. Depending on how big the nick or the burr is, it can be significant or it could be minor. But the fact is, if you dropped an instrument and you suspect there may be any problem, either use a check standard to verify that there is no issue, or send it back for calibration to confirm.

c. The torque wrench. Significant operator error can be introduced. Some of the gauge R&R studies that I've done, both with customers and within TRANSCAT, show typical operator errors up to 3 or 4%. But it can be as bad as 10% depending on the application and the range of torque; low-end torque drivers can have higher percentages. So even if the calibration of the torque wrench shows that the instrument is well within the manufacturer's specifications for performance, the operator can then introduce significant error beyond that in torquing fasteners. That's another way risk can creep into the process, not necessarily from the calibration.

d. Dry block calibrators, or dry wells. Using the wrong sized insert for the size of the temperature probe is going to give some cooling and is not going to transfer the heat as uniformly as it should. There are axial and radial uniformities. Radial uniformity is from well to well, one point to another. Axial uniformity is top to bottom in the well. Overloading the block with too many probes inserted can also cool it off and cause errors. So the use of the instrument is critical to maintaining good measurement. (See Our Webinar on Dry Block Calibration)

e. An oscilloscope and a lack of understanding of bandwidth is another example. Using the instrument above its bandwidth cut-off frequency will give a reduced amplitude value. So while you may think that you have a good amplitude reading on that scope, you may have a value that's much lower than the true amplitude because of the bandwidth cut-off.
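To put a rough number on that amplitude loss, here is a minimal sketch. It assumes the scope's front end rolls off like a single-pole low-pass filter with the rated bandwidth as the -3 dB point; real oscilloscopes may roll off differently, so treat the output as illustrative only. The function name and the 100 MHz example scope are assumptions, not from the webinar.

```python
import math

def displayed_fraction(signal_hz: float, bandwidth_hz: float) -> float:
    """Fraction of the true sine amplitude shown on screen.

    Assumes a single-pole (first-order) low-pass response, where the
    rated bandwidth is the -3 dB frequency. This is a simplification.
    """
    return 1.0 / math.sqrt(1.0 + (signal_hz / bandwidth_hz) ** 2)

if __name__ == "__main__":
    scope_bandwidth_hz = 100e6  # hypothetical 100 MHz scope
    for f_mhz in (10, 50, 100, 200):
        frac = displayed_fraction(f_mhz * 1e6, scope_bandwidth_hz)
        print(f"{f_mhz:>4} MHz signal: about {frac:.1%} of true amplitude")
```

Under this assumption the reading is already about 30% low right at the rated bandwidth, which is why a signal near or above the cut-off can look "good" on screen while being significantly understated.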

3. The beginning of period reliability. Here are some things to think about. When you order an instrument, do you put it right into production without having it calibrated first? I've seen that happen: people use p-cards and don't run the purchase through the calibration system, or calibration is not aware of the newly purchased instrument, and the instrument is being used to make decisions. If you relied on the production certificate for calibration, that's not always a guarantee that the instrument is performing within the manufacturer-stated specs. Sometimes that's simply a statement that the instrument went through an operational check or a production test, and it guarantees that the instrument is functional, not necessarily calibrated. Was the initial OEM cal accredited through 17025? Very often this is not the case.

And there are other errors that can be introduced that are not accounted for in the calibration. Then for re-calibration of an instrument, not the new cal but subsequent cals, was the instrument returned to you in a condition close to being out of tolerance? Did it come back from the lab with some of the readings very close to the tolerance limit? That means that during the next calibration interval it has a chance of going outside of those acceptable limits. And what would that do to your decisions about product, or wherever the instrument is used?

4. And then end of period reliability, which is the most critical information. It assesses the status of the instrument and tells you whether or not the decisions you've been making with that instrument were good or bad. If the instrument was found out of tolerance, then obviously you've got some evaluation to do to determine whether those out of tolerance readings impacted your decisions. Was it found to be in tolerance but, again, close to being out of tolerance? There are cases where in-tolerance situations could cause risk in the measurements for the product. What impact does the lab's uncertainty have on this condition? That's what I'm getting at. You can have an in-tolerance condition where the uncertainty of the laboratory's measurement could have given a false accept reading in that region, and they should have called it out of tolerance. There are other webinars that get into more detail on that if you're interested, but it's something to consider. How good is the lab's measurement in determining the instrument's value? And how do you apply all that information to the use of the instrument during its last usage period? How do you take those readings, whether close to being out of tolerance or absolutely out of tolerance, and apply them back to the places where the instrument was used over its last cal interval to determine whether those offsets in the instrument had any impact on the decisions that were made?
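The false accept concern can be made concrete with a short calculation. The sketch below is only an illustration under stated assumptions: the lab's expanded uncertainty is reported at k = 2, the measurement error is normally distributed, and the tolerance is symmetric about nominal. The function names and example numbers are hypothetical and do not represent any particular lab's method.

```python
import math

def normal_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def prob_in_tolerance(observed_error: float, tol_limit: float,
                      expanded_unc: float, k: float = 2.0) -> float:
    """Probability that the true error lies within +/- tol_limit.

    observed_error: as-found error reported by the lab (reading - nominal)
    tol_limit:      symmetric tolerance half-width
    expanded_unc:   lab's expanded uncertainty, assumed stated at coverage k
    """
    u = expanded_unc / k  # standard uncertainty
    upper = (tol_limit - observed_error) / u
    lower = (-tol_limit - observed_error) / u
    return normal_cdf(upper) - normal_cdf(lower)

if __name__ == "__main__":
    # Hypothetical reading at 90% of a +/-1.0 tolerance, two different labs
    for label, unc in (("4:1 lab", 0.25), ("1:1 lab", 1.0)):
        p = prob_in_tolerance(observed_error=0.9, tol_limit=1.0, expanded_unc=unc)
        print(f"{label}: {p:.1%} chance the instrument is truly in tolerance")
```

The same "in tolerance" reading carries noticeably more false accept risk when the lab's uncertainty is large relative to the tolerance, which is the point of asking how good the lab's measurement is.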

Risks in the Calibration Process

So let's talk about the calibration process and where risk creeps into that. What are the measurement parameters and functions on the instrument that's being submitted for calibration? Are there multiple ranges or values on the instrument?

- What's the accuracy of each range and value?

- Is the lab that you're submitting it to capable of performing the calibrations for all of those parameters, functions, ranges or values for that instrument?

- How does their measurement uncertainty compare to the tolerances of the instrument? This is where we get into ratios. Whether it's an accuracy ratio or uncertainty ratio. Here we're talking about uncertainty of the lab, so it would be an uncertainty ratio where you're comparing the tolerance of the instrument at each of its different readings, to the uncertainty of the laboratory at each of those different readings.

- And is the lab providing an uncertainty that results in a meaningful or valid ratio? The acceptable ratio is going to be based on what your company's standard operating procedures are. Most of the time that's 4:1 or better; sometimes it's a different level. Looking at those ratios, if the tolerance and the uncertainty are very close to one another, what we would call a 1:1 ratio, then that's going to increase the risk of false acceptance of that calibration measurement value, which then could impact the readings on the instruments or product where the instrument is used.

- What test points did the calibration lab check on the instrument, compared to what you expect they should be checking to support the application where the instrument is used?

- What tolerances did they apply?

- Are they the same as the ones stated by the OEM, or stated by you if it's a custom cal?

- If you decided to use tolerances that are different than those stated by the OEM, in other words a custom cal, does your cal provider know this and have they applied that to your calibration?

This all ties together to suitability of the instrument and understanding how that is acceptable for the application where the instrument will be used in your process and making sure the cal lab is supporting the test points and tolerances that are needed to keep that suitability decision intact and not affect your product decisions.
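The uncertainty ratio check described above is simple arithmetic, and it can be run for every test point on a cal report. The following is a minimal sketch: the test point labels and numbers are made up for illustration, the 4:1 requirement is taken as the default only because it is the most common figure mentioned, and tolerance and uncertainty are assumed to be expressed in the same units.

```python
def uncertainty_ratio(tolerance: float, lab_uncertainty: float) -> float:
    """Ratio of the instrument tolerance to the lab's uncertainty at one test point."""
    return tolerance / lab_uncertainty

def flag_low_ratios(points, required_ratio: float = 4.0):
    """Return labels of test points whose ratio falls below the required ratio.

    points: iterable of (label, tolerance, lab_uncertainty) tuples.
    """
    return [label for label, tol, unc in points
            if uncertainty_ratio(tol, unc) < required_ratio]

if __name__ == "__main__":
    # Hypothetical test points: (label, +/- tolerance, lab uncertainty)
    report = [
        ("range A", 0.5, 0.1),   # 5:1
        ("range B", 0.2, 0.1),   # 2:1
        ("range C", 0.1, 0.1),   # 1:1
    ]
    for label in flag_low_ratios(report):
        print(f"{label}: ratio below 4:1, higher false acceptance risk")
```

Substitute the required ratio from your own standard operating procedures; any flagged point is a place to ask the lab about its capability or to consider guard banding.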

- Technician competency - for the person that does the calibration. How would you know if they're competent? It's really your supplier's or your internal lab's job to make sure the technician is competent. With [Inaudible 20:25] 17025 is one process where competency is assessed independently.

- What about the cal report that you receive? Is that easy or difficult to understand? Is it laid out well? And easy to apply?

Calibration Non-Conformance Reviews

So going back to the impact on your processes: if the cal results show an out of tolerance, that flags that you need to do something else with that data to determine whether there is an impact in your quality system. Doing that can save money by preventing safety problems from occurring and by preventing the negative margin results of bad product being released. So the NCR review is very critical for that reason, and it's the whole point of the traceability chain. In my experience, from what I've seen across a number of companies where I've done internal audits and reviews, the NCR review is often under-executed, and that gives the false perception that there's no impact to the product. We offer metrology consulting in this area to help engineers and quality personnel understand how to do this properly and thoroughly and to get the most out of the impact evaluation.

So here's a question that I usually throw out to my clients when I'm performing this consulting. If you look over the past five year period, how many out of tolerance NCR evaluations have you had to perform? And roughly what percentage of the number of calibrations in a year does that come out to be? Is it a very low percentage? Is it a moderate percentage? Is it a surprising percentage? If it's high then you may have issues with the instrumentation in your inventory.

If it's relatively low, then it seems like things are being held under control, but again, that goes back to making sure the calibrations that are being performed are supporting the applications of the instrument.

So of the out of tolerance NCRs, how many of those had a negative impact on the product? If the answer is 0 or very near 0, then it could be for one of two reasons. You may have a very robust quality program that makes sure there's enough buffer between the instrument tolerances for calibration and the product acceptance tolerances, and I've seen that in many cases. But if it's near zero for another reason, it could be because you're not doing good, thorough out of tolerance impact reviews. So if it seems too good to be true, be sure you understand why it's so low, and consider the quality of the NCR evaluations being performed. And if you need help with that, ask for help. What you don't want is an external auditor picking up on the fact that you have never experienced a problem with out of tolerance impacts, and then digging into the reviews to see how well they were done, or looking at the calibrations to see if those were done properly.

Risk Mitigation in Your Measurement Assurance Program

So, risk mitigation in your process. If you discover an out of tolerance that may have negatively influenced product decisions, and your NCR review shows that it caused you to reject good product, then it's in your best interest to determine how much this cost your company through scrapped product, rework of product, or just the cost of having to perform the out of tolerance evaluations.

The other end of that is if it caused you to accept bad product. Then you need to determine if there's a consumer perception issue because that product is out there and customers are noticing it, or if you actually have lawsuits or safety issues resulting in property damage, medical costs, or fatalities. This is what you want to avoid by taking risk out of your processes and having good measurement assurance that leads to good decisions about product.

Determining root cause to reduce or prevent these costs from recurring is not only a good corrective action but, if you're doing this ahead of time, a good preventive action. In the end, running your business is not just about policies. It's not just about mandates. It's about making a safe, reliable, superior product that fills a need in the market and is profitable for your company. You reduce profits if things are happening that shouldn't be happening, false accepts and false rejects, that cause your resources to have to re-do work as opposed to producing new work. Measurement assurance is something you design into your process with these ten areas to help you minimize risk in making decisions about the process or about your product safety and quality. Too often, not all of these ten categories are implemented, causing reliability issues and causing your measurement assurance program to lose value and become ineffective. And if you're going to put the money and time into some of it, why not put it into all of it? Make it make sense. Make it result in keeping your cost and safety where they need to be.

Thank you very much. We'd like to open this up to questions, now. Roman?

Questions and Answers

1. When you refer to recalibration that is close to being out of tolerance, do you recommend bringing these instruments to a nominal value each time?

Howard: I wouldn't do that every time, and there are a couple of reasons why. Optimizing instruments is the phrase we use for that. When you do that, if you have anything that is running on a control chart and you're trending it over time, resetting the values on the instrumentation at every cal interval is going to cause you to have to restart those control charts. Or if the person running the control charts isn't aware of that, it's going to cause problems with those control chart values: they will see a shift and they will have to reset.

The other thing is that if you are wanting to monitor the instrumentation to determine calibration intervals and make adjustments up or down for those intervals, again resetting or nominalizing every time doesn't allow you to see the true performance of the instrumentation. So there's actually a better way to do that. And it's not in the scope of this webinar, but it has to do with quantifying the measurement risk and knowing when to make those adjustments rather than doing it every time.

Roman: Okay. Thank you, Howard. Um, again, just as a reminder, if anyone has any questions please - please feel free to enter them in the question box.

2. Is there a way to minimize the end-of-period reliability impact between calibration intervals?

Howard: Yes, there is. If you're just relying on cal interval adjustments to determine where the optimal interval should be to prevent out of tolerances, then the problem is that you're assuming, or hopefully you've verified, that your calibrations are thorough and absolutely support the instrumentation where it's being used in the application, that the tolerances are correct, and all of that. It's also assuming the instrument is being used properly without errors introduced by the operator. So if you've verified that those are not problems, and you're running cal interval adjustments and getting those to the optimal level, that's one part of the battle. The other thing that you can do, regardless of where you set those cal intervals, is what's called a cross-check: using a check standard to verify mid-interval, or as often as you need to, which could be daily for very critical areas. It's not a full calibration. It's using a standard that allows you to determine if something has shifted in the instrumentation that's making decisions. You probably want to do this in high cost, high liability processes on your product. That type of thing will help you catch problems before they get out of control, or reduce the risk; a mid-interval check cuts the risk in half. When you see a shift, you stop and send the instrument back in for a full calibration to understand whether there's an out of tolerance or not. That may even show that there's nothing wrong with the instrument and that it was an operator error, but the point is that you've stopped and caught the problem before it goes on for the rest of the calibration interval. So check standards are a very good way to mitigate some of the risk.
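A check standard cross-check can be as simple as comparing each check reading against the standard's known value and an action limit. Here is a minimal sketch of that idea; the function name, the readings, and the action limit are all hypothetical, and how the limit is chosen (for example, as a fraction of the instrument tolerance) is left to your own procedures.

```python
def first_out_of_limit(readings, reference_value: float, action_limit: float):
    """Return the index of the first check reading outside the action limit.

    readings:        instrument readings of the check standard, in time order
    reference_value: the check standard's known value
    action_limit:    maximum allowed absolute deviation before stopping
    Returns None if every reading is within the limit.
    """
    for i, reading in enumerate(readings):
        if abs(reading - reference_value) > action_limit:
            return i
    return None

if __name__ == "__main__":
    # Hypothetical daily checks of a 100.00-unit check standard
    daily_checks = [100.01, 99.98, 100.02, 100.09, 100.11]
    hit = first_out_of_limit(daily_checks, reference_value=100.00, action_limit=0.05)
    if hit is None:
        print("All checks within limit; keep using the instrument.")
    else:
        print(f"Check {hit + 1} exceeded the limit; stop and send for full calibration.")
```

Only the product measured since the last passing check needs an impact review, which is how the cross-check shrinks the exposure compared with waiting for the end of the interval.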

Roman: Okay - uh Howard, I think this might be a follow up to our last question, and it might make sense to you.

3. If you’re not implementing control charts, would you make an adjustment if the as found values are at 50% of the tolerance or greater?

Howard: That's one way to do it. What we do is use a tool that we've designed called PCS, which stands for probability of conformance to specifications. It's a tool that allows us to determine when a reading is in a guard band, at a risk value, and to quantify the risk as well. That allows our customers to set a decision rule on what values they want to trigger adjustments, so that you're not optimizing every time, and you're not optimizing even at just 50% every time. It's optimizing at the right level, based on the uncertainty and on the tolerance of the instrument, and that can vary for each and every instrument. They can make one decision rule that allows us to catch that across the board, with the guard band set at the level of risk they can accept. So without that tool, 50% is still conservative. It's not fully optimizing. Another way to do it is to figure out what the test uncertainty ratio is. If it's 4:1, you would invert that to 1/4 and determine that 25% of the tolerance should be guard banded, meaning you adjust at 75% of tolerance or greater. If it was 3:1, you take one over that, which is 33%, and then 67% is where you'd adjust. So you could do it that way as well.
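The ratio-based rule of thumb at the end of that answer works out as follows. This sketch covers only the simple 1/TUR guard band Howard describes, not the PCS tool; the function names are hypothetical.

```python
def adjust_threshold(tur: float) -> float:
    """Fraction of tolerance at or above which to adjust: 1 - 1/TUR."""
    return 1.0 - 1.0 / tur

def should_adjust(as_found_error: float, tolerance: float, tur: float) -> bool:
    """True if the as-found error falls inside the guard band near the limit."""
    return abs(as_found_error) >= adjust_threshold(tur) * tolerance

if __name__ == "__main__":
    for tur in (4.0, 3.0):
        print(f"TUR {tur:g}:1 -> adjust at {adjust_threshold(tur):.0%} of tolerance or greater")
    # Hypothetical as-found error of 0.8 against a +/-1.0 tolerance at 4:1
    print(should_adjust(as_found_error=0.8, tolerance=1.0, tur=4.0))
```

With a 4:1 ratio, an as-found reading at 80% of tolerance falls in the guard band and would be adjusted, while one at 70% would be left alone so its trend stays visible.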

Roman: Okay, thank you. So the question I started was...

4. How do we know if it was performed correctly? The manufacturer provides a cal cert with data so are we safe to assume it was executed correctly? How or when could we have confidence that it was performed correctly?

Howard: That gets into having some experience, and you may need to bring consulting in for some of this, just as an initial review to help you understand what to look for. But if you're talking about a manufacturer's calibration, there are a couple of things I want to say. One, not all manufacturers are good at metrology. Some are very good at it. Some are not. If the calibration procedure in a manufacturer's operating manual moves you along in a fashion that says set the standard to this value, make this adjustment, that's not a calibration. That's using the word calibration incorrectly for adjustment. And if you're doing adjustment, you're not preserving that end of period reliability data, and you have no basis to go back and see whether decisions about product were good or bad. So you definitely don't want to be making adjustments before you capture all the data.

The other thing is that some manufacturers' calibration procedures tend to take shortcuts and don't cover everything they should to give you the data you need in the application where you're using the instrument. Ideally, it would be great to have the calibration support the test points exactly as the instrument is used. That would be a custom cal for every instrument, but that's very costly. So for the most part, a default calibration that follows the test points or methodology of the manufacturer is typical of what we do, unless that doesn't make sense. And that "unless it doesn't make sense" part is where you may need some help in making the decision. If it smells funny or looks funny when you're looking at it, that's when you raise the question. If it seems to be a sound procedure that's following good test points, then that's fine. The tolerances should always default to what the manufacturer sets for the performance of the instrument, unless the way you're using the instrument gives you a reason to change those values. Then that would become a custom cal, based on what the requirements are. Hopefully that answers the question.

Roman: Thank you. Um, the next question is...

5. We've never really assessed suitability of our instruments, and really need to do so. And this gave us some ideas, but where can I get more information or help us better understand how to get started?

Howard: We offer a white paper on suitability that covers the things you should consider, as well as equipment qualification. You're welcome to find that on our website. And we offer consulting beyond that if you need some additional help. We've done that for a number of companies.

Roman: Okay. Thank you, Howard. And then one - it looks like one final question.

6. If calibrations should be performed with a 4:1 rule for the standards UUT, what is the typical rule of thumb for the manufacturer's floor process specification and test equipment they use to take these manufacturing process measurements?

Howard: I've seen 4:1 used in those applications. I've seen 6:1 used for more of the dimensional applications. I've seen 10:1 used in pharmaceutical processes. It's really going to be based on how much risk you can afford versus the cost to achieve those ratios, so it's going to be a business decision. If you have questions about specific applications, or examples that you'd like to share, feel free to submit those and I'll take a look. We have a new portion of our website coming up soon called Ask the Experts, and that may be a good forum for you to submit questions like that with specific examples.

Roman: Okay, thank you Howard. There are no more questions, so that concludes our time for today. If you do have further questions and would like to get more information about TRANSCAT service offerings and the products we distribute, please feel free to contact us at 800-828-1470 or visit us on the web at TRANSCAT.com. Thanks for joining us today. We hope you found today's information useful and deployable to your processes and jobs. And have a great rest of the week. Thank you.

Howard: Thank you, everyone.
